Model verification

- Statistical significance of particular variables in the model (significance of the hazard ratio)

On the basis of the coefficient and its standard error we can infer whether the independent variable for which the coefficient was estimated has a significant effect on the dependent variable. For that purpose we use the Wald test.

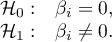

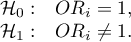

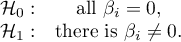

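Hypotheses:

$$H_0: \beta_i = 0, \qquad H_1: \beta_i \neq 0,$$

or, equivalently, in terms of the hazard ratio $HR_i = e^{\beta_i}$:

$$H_0: HR_i = 1, \qquad H_1: HR_i \neq 1.$$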

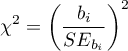

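The Wald test statistic is calculated according to the formula:

$$\chi^2 = \left(\frac{b_i}{SE_{b_i}}\right)^2,$$

where $b_i$ is the estimate of the coefficient $\beta_i$ and $SE_{b_i}$ is its standard error.

The statistic asymptotically (for large sample sizes) has the Chi-square distribution with $1$ degree of freedom.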

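The p-value, determined on the basis of the test statistic, is compared with the significance level $\alpha$:

- if $p \le \alpha$, we reject $H_0$ and accept $H_1$,

- if $p > \alpha$, there is no reason to reject $H_0$.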
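As a rough illustration of the Wald test described above, the following Python sketch computes the statistic and its p-value for a single coefficient. It assumes the usual form of the statistic, the squared ratio of the coefficient to its standard error, referred to a Chi-square distribution with 1 degree of freedom. The function name `wald_test` and the numeric inputs are hypothetical and are not taken from the example file.

```python
import math

def wald_test(b, se):
    """Wald chi-square statistic and p-value for one coefficient (1 df)."""
    if se <= 0:
        raise ValueError("standard error must be positive")
    chi2 = (b / se) ** 2
    # With 1 degree of freedom the Chi-square upper tail equals the
    # two-sided tail of the standard normal distribution.
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p

# Hypothetical coefficient and standard error, for illustration only.
b, se = 1.5, 0.5
chi2, p = wald_test(b, se)
hazard_ratio = math.exp(b)  # the hazard ratio is exp(coefficient)
alpha = 0.05
print(f"chi2 = {chi2:.3f}, p = {p:.4f}, HR = {hazard_ratio:.3f}")
print("reject H0" if p <= alpha else "no reason to reject H0")
```

Only the standard library is used here; a statistics package would normally report the same quantities directly.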

- The quality of the constructed model

A good model should fulfill two basic conditions: it should fit the data well and be as simple as possible. The quality of a Cox proportional hazards model can be evaluated with a few general measures based on:

- $L_{FM}$ – the maximum value of the likelihood function of the full model (with all variables),

- $L_0$ – the maximum value of the likelihood function of the null model (the model with no independent variables),

- $d$ – the observed number of failure events.

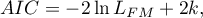

- Information criteria are based on the information entropy carried by the model (model uncertainty), i.e. they evaluate the information lost when a given model is used to describe the studied phenomenon. We should therefore choose the model with the minimum value of a given information criterion.

$AIC$, $AICc$, and $BIC$ are a kind of compromise between goodness of fit and complexity. The second element of the sum in the formulas for the information criteria (the so-called penalty function) measures the simplicity of the model. It depends on the number of parameters ($k$) in the model and the number of complete observations ($d$). In both cases the element grows as the number of parameters increases, and it grows faster the smaller the number of observations.

The information criterion, however, is not an absolute measure: if none of the compared models describes reality well, the information criterion will give no warning of it.

- Akaike information criterion

$$AIC = -2\ln L_{FM} + 2k$$

It is an asymptotic criterion, appropriate for large sample sizes.

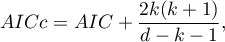

- Corrected Akaike information criterion

$$AICc = AIC + \frac{2k(k+1)}{d-k-1}$$

Because the correction of the Akaike information criterion takes the sample size (the number of failure events) into account, it is the recommended measure, also for smaller samples.

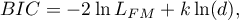

- Bayesian information criterion (Schwarz criterion)

$$BIC = -2\ln L_{FM} + k\ln(d)$$

Just like the corrected Akaike criterion, it takes the sample size (the number of failure events) into account; see Volinsky and Raftery (2000)1).
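The three criteria can be compared side by side with a short Python sketch. It assumes the standard definitions, with the number of failure events $d$ used as the sample size in the AICc correction and in the BIC penalty (the Volinsky and Raftery variant): $AIC = -2\ln L_{FM} + 2k$, $AICc = AIC + 2k(k+1)/(d-k-1)$, $BIC = -2\ln L_{FM} + k\ln d$. The function name and the log-likelihood values are hypothetical.

```python
import math

def information_criteria(log_l_fm, k, d):
    """Return (AIC, AICc, BIC) from the maximised log-likelihood of the
    full model, the number of parameters k and the number of events d."""
    if d - k - 1 <= 0:
        raise ValueError("AICc requires d > k + 1")
    aic = -2.0 * log_l_fm + 2.0 * k
    aicc = aic + 2.0 * k * (k + 1) / (d - k - 1)
    bic = -2.0 * log_l_fm + k * math.log(d)
    return aic, aicc, bic

# Hypothetical values: two candidate models fitted to the same data.
d = 30
model_a = information_criteria(log_l_fm=-85.0, k=3, d=d)
model_b = information_criteria(log_l_fm=-84.6, k=5, d=d)
for name, (aic, aicc, bic) in (("A", model_a), ("B", model_b)):
    print(f"model {name}: AIC={aic:.2f} AICc={aicc:.2f} BIC={bic:.2f}")
# The model with the smaller value of a given criterion is preferred.
```

In this made-up comparison the larger model improves the log-likelihood only slightly, so the penalty term dominates and all three criteria favour model A.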

- Pseudo R

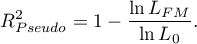

- the so-called McFadden R

- the so-called McFadden R is a goodness of fit measure of the model (an equivalent of the coefficient of multiple determination

is a goodness of fit measure of the model (an equivalent of the coefficient of multiple determination  defined for multiple linear regression).

defined for multiple linear regression).

The value of that coefficient falls within the range of  , where values close to 1 mean excellent goodness of fit of the model,

, where values close to 1 mean excellent goodness of fit of the model,  – a complete lack of fit. Coefficient

– a complete lack of fit. Coefficient  is calculated according to the formula:

is calculated according to the formula:

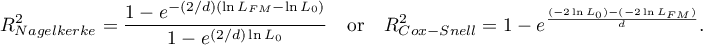

As coefficient  does not assume value 1 and is sensitive to the amount of variables in the model, its corrected value is calculated:

does not assume value 1 and is sensitive to the amount of variables in the model, its corrected value is calculated:
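A minimal Python sketch of the McFadden coefficient, assuming the usual definition $1 - \ln L_{FM} / \ln L_0$ computed from the maximised log-likelihoods of the full and null models. The function name and the input values are hypothetical; the corrected variants are not shown because their exact form is not given here.

```python
def mcfadden_r2(log_l_fm, log_l_0):
    """McFadden pseudo R-squared from two maximised log-likelihoods."""
    if log_l_0 >= 0:
        raise ValueError("the null log-likelihood is expected to be negative")
    if log_l_fm < log_l_0:
        raise ValueError("the full model cannot fit worse than the null model")
    return 1.0 - log_l_fm / log_l_0

# Hypothetical log-likelihoods, for illustration only.
r2 = mcfadden_r2(log_l_fm=-85.0, log_l_0=-93.5)
print(f"McFadden pseudo R^2 = {r2:.4f}")
```

A value near 0 means the independent variables add little over the null model; values of this coefficient are typically much lower than the $R^2$ seen in linear regression.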

- Statistical significance of all variables in the model

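The basic tool for evaluating the significance of all variables in the model is the likelihood ratio test. The test verifies the hypothesis that all coefficients in the model are equal to zero:

$$H_0: \beta_1 = \beta_2 = \dots = \beta_k = 0, \qquad H_1: \text{there exists } \beta_i \neq 0.$$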

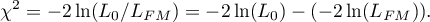

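The test statistic has the form presented below:

$$\chi^2 = -2\ln\left(\frac{L_0}{L_{FM}}\right) = -2\ln L_0 - (-2\ln L_{FM})$$

The statistic asymptotically (for large sample sizes) has the Chi-square distribution with $k$ degrees of freedom, where $k$ is the number of parameters in the model.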

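The p-value, determined on the basis of the test statistic, is compared with the significance level $\alpha$:

- if $p \le \alpha$, we reject $H_0$ and accept $H_1$,

- if $p > \alpha$, there is no reason to reject $H_0$.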
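The likelihood ratio test can be sketched in Python as follows. The sketch assumes the statistic is twice the difference between the maximised log-likelihoods of the full and null models, with $k$ degrees of freedom. To stay within the standard library, the Chi-square tail probability is computed by a small helper, `chi2_sf`, written for integer degrees of freedom; both function names and the inputs are hypothetical.

```python
import math

def chi2_sf(x, df):
    """Upper tail P(X > x) of a Chi-square distribution, integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0.0:
        return 1.0
    h = x / 2.0
    # Closed-form starting values of the regularised upper incomplete gamma
    # function Q(a, h): Q(1, h) for even df, Q(1/2, h) for odd df.
    if df % 2 == 0:
        a, q = 1.0, math.exp(-h)
    else:
        a, q = 0.5, math.erfc(math.sqrt(h))
    # Recurrence: Q(a + 1, h) = Q(a, h) + h**a * exp(-h) / Gamma(a + 1).
    while a < df / 2.0:
        q += h ** a * math.exp(-h) / math.gamma(a + 1.0)
        a += 1.0
    return q

def likelihood_ratio_test(log_l_fm, log_l_0, k):
    """LR statistic and p-value for the full model against the null model."""
    stat = 2.0 * (log_l_fm - log_l_0)
    return stat, chi2_sf(stat, k)

# Hypothetical log-likelihoods and number of parameters.
stat, p = likelihood_ratio_test(log_l_fm=-85.0, log_l_0=-93.5, k=3)
print(f"chi2 = {stat:.2f}, df = 3, p = {p:.5f}")
```

With a numerical library available, the helper could be replaced by that library's Chi-square survival function.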

- AUC – area under the ROC curve

The ROC curve, constructed from information about the occurrence or absence of an event and the combination of independent variables and model parameters, allows us to assess the ability of the built Cox PH regression model to classify cases into two groups: (1 – event) and (0 – no event). The resulting curve, and in particular the area under it, illustrates the classification quality of the model. When the ROC curve coincides with the diagonal $y = x$, the decision to assign a case to class (1) or (0) made on the basis of the model is only as good as randomly allocating the cases under study to these groups. The classification quality of the model is good when the curve lies well above the diagonal $y = x$, that is, when the area under the ROC curve is much larger than the area under the straight line $y = x$, and thus larger than $0.5$.

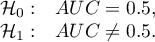

Hypotheses:

$$H_0: AUC = 0.5, \qquad H_1: AUC \neq 0.5.$$

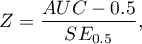

The test statistic has the form:

$$Z = \frac{AUC - 0.5}{SE_{AUC}},$$

where:

- $SE_{AUC}$ – the standard error of the area.

The statistic $Z$ asymptotically (for large sample sizes) has a normal distribution.

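The p-value, determined on the basis of the test statistic, is compared with the significance level $\alpha$:

- if $p \le \alpha$, we reject $H_0$ and accept $H_1$,

- if $p > \alpha$, there is no reason to reject $H_0$.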
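The idea behind the AUC and its test can be illustrated with a short Python sketch. It estimates the area as the proportion of (event, no event) pairs in which the case with the event has the higher model score, counting ties as one half, and then compares the area with 0.5 using a normal statistic. The standard error is passed in as a given number, because how it is estimated is not specified here; the function names, scores and standard error are hypothetical.

```python
import math

def auc_from_scores(scores, events):
    """AUC as the share of (event, no-event) pairs ranked correctly."""
    pos = [s for s, e in zip(scores, events) if e == 1]
    neg = [s for s, e in zip(scores, events) if e == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))

def auc_z_test(auc, se):
    """Z statistic and two-sided p-value for H0: AUC = 0.5."""
    z = (auc - 0.5) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p

# Hypothetical linear-predictor values and event indicators.
scores = [2.1, 1.4, 0.3, 0.9, -0.5, 0.1, -1.2, 0.9]
events = [1, 1, 1, 0, 0, 0, 0, 1]
auc = auc_from_scores(scores, events)
z, p = auc_z_test(auc, se=0.125)  # assumed standard error
print(f"AUC = {auc:.5f}, Z = {z:.3f}, p = {p:.4f}")
```

Note that this treats the outcome as a plain binary label, as the description above does, and ignores the time at which each event occurred.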

In addition, a proposed cut-off point value for the combination of independent variables and model parameters is given for the ROC curve.

EXAMPLE cont. (remissionLeukemia.pqs file)

1) Volinsky C.T., Raftery A.E. (2000), Bayesian information criterion for censored survival models. Biometrics, 56(1):256–262

en/statpqpl/survpl/phcoxpl/werpl.txt · Last modified: 2022/02/16 10:35 by admin

Except where otherwise noted, content on this wiki is licensed under the following license: CC Attribution-Noncommercial-Share Alike 4.0 International