Hypothesis testing

The process of generalizing the results obtained from a sample to the whole population is divided into 2 basic parts:

- estimation

estimating the values of population parameters on the basis of a statistical sample,

- verification of statistical hypotheses

testing specific assumptions formulated about the parameters of the general population on the basis of sample results.

Point and interval estimation

In practice, we usually do not know the parameters (characteristics) of the whole population. We only have a sample chosen from the population. Point estimators are the characteristics obtained from a random sample. The precision of an estimator is described by its standard error. The true population parameters lie in the neighbourhood of the point estimate. For example, the population arithmetic mean $\mu$ lies in the neighbourhood of its estimator from the sample, which is $\overline{x}$.

If you know the estimators from the sample and their theoretical distributions, you can estimate the values of the population parameters with a confidence level ($1-\alpha$) defined in advance. This process is called interval estimation, the resulting interval is called a confidence interval, and $\alpha$ is called the significance level.

The most commonly used significance levels are 0.05, 0.01 and 0.001.
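Interval estimation can be sketched in a few lines. The example below is only an illustration under stated assumptions: it uses the normal approximation for the quantile (for small samples a Student's t quantile would be more appropriate), and both the function name `mean_confidence_interval` and the data are hypothetical, not taken from PQStat.

```python
import math
import statistics

def mean_confidence_interval(sample, alpha=0.05):
    """Return (mean, standard_error, lower, upper) for a 1 - alpha interval.

    Assumption: normal approximation for the quantile; a Student's t
    quantile would give a wider interval for small samples.
    """
    n = len(sample)
    mean = statistics.fmean(sample)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    std_error = statistics.stdev(sample) / math.sqrt(n)
    # Two-sided quantile of the standard normal distribution.
    z = statistics.NormalDist().inv_cdf(1 - alpha / 2)
    return mean, std_error, mean - z * std_error, mean + z * std_error

# Hypothetical body-mass measurements (kg), for illustration only.
sample = [71.2, 68.5, 74.9, 69.8, 72.4, 70.1, 73.3, 67.9, 75.0, 70.6]
mean, se, low, high = mean_confidence_interval(sample, alpha=0.05)
print(f"mean = {mean:.2f}, SE = {se:.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

Choosing a smaller significance level, for example `alpha=0.01`, widens the interval, because a higher confidence level requires a larger quantile.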

2022/02/09 12:56

Verification of statistical hypotheses

To verify statistical hypotheses, follow these steps:

- The 1st step: Formulate hypotheses that can be verified by means of statistical tests.

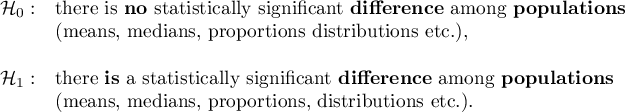

Each statistical test gives you a general form of the null hypothesis $H_0$ and the alternative one $H_1$:

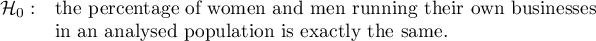

The researcher must formulate the hypotheses in a way that is consistent with reality and with the requirements of the statistical test, for example:

If you do not know which percentage (men or women) in the analysed population might be greater, the alternative hypothesis should be two-sided. This means you should not assume the direction.

It may happen (but very rarely) that you are sure you know the direction of the alternative hypothesis. In this case you can use a one-sided alternative hypothesis.
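As an illustration, the two cases can be written as follows. The symbols $p_w$ and $p_m$ are assumed here (they do not come from the original page) and denote the proportions of women and men in the population; the direction shown in the one-sided case is chosen arbitrarily.

```latex
% Two-sided alternative: no direction is assumed.
\begin{array}{ll}
H_0: & p_w = p_m, \\
H_1: & p_w \neq p_m.
\end{array}

% One-sided alternative: the direction is assumed in advance.
\begin{array}{ll}
H_0: & p_w = p_m, \\
H_1: & p_w > p_m.
\end{array}
```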

- The 2nd step: Verify which of the hypotheses $H_0$ or $H_1$ is more probable. Depending on the kind of analysis and the type of variables, you should choose an appropriate statistical test.

Note 1

Note that choosing a statistical test mainly means identifying the measurement scale (interval, ordinal or nominal) of the data you want to analyse. It also involves choosing the analysis model (dependent or independent).

Measurements of a given feature are called dependent (paired) when they are made several times on the same objects. When measurements of a given feature are performed on objects that belong to different groups, the measurements are called independent (unpaired).

Some examples of research in dependent groups:

Examining the body mass of patients before and after a slimming diet, examining the reaction to a stimulus within the same group of objects but under two different conditions (for example, at night and during the day), examining the agreement of creditworthiness assessments made by two different banks for the same group of clients, etc.

Some examples of research in independent groups:

Examining body mass in a group of healthy patients and in a group of ill ones, testing the effectiveness of several different kinds of fertilisers, comparing the gross domestic product (GDP) of several countries, etc.
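The difference between the two models shows up directly in how a test statistic is computed. The sketch below is a hand-written illustration, not PQStat's implementation: the function names and all data are hypothetical, and the t statistic (paired version and Welch's version for independent groups) is chosen here only as a familiar example for interval-scale data.

```python
import math
import statistics

def paired_t_statistic(before, after):
    """t statistic for dependent (paired) measurements on the same objects."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    # The paired model works on within-object differences.
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    return statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))

def welch_t_statistic(group_a, group_b):
    """t statistic for independent (unpaired) groups, unequal variances allowed."""
    var_a = statistics.variance(group_a) / len(group_a)
    var_b = statistics.variance(group_b) / len(group_b)
    diff = statistics.fmean(group_a) - statistics.fmean(group_b)
    return diff / math.sqrt(var_a + var_b)

# Hypothetical body mass (kg) of the same five patients before and after a diet.
before = [82.0, 91.5, 77.3, 88.1, 95.4]
after = [79.5, 88.0, 76.1, 85.0, 91.9]
print("paired t:", round(paired_t_statistic(before, after), 3))

# Hypothetical body mass (kg) in two separate groups of patients.
healthy = [70.2, 68.4, 74.1, 71.9, 69.5, 72.8]
ill = [76.3, 80.1, 73.8, 78.6, 75.2]
print("Welch t:", round(welch_t_statistic(healthy, ill), 3))
```

Applying the independent-groups formula to the paired data would ignore the link between the two measurements of each patient, which is why the analysis model has to be chosen before the test.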

Note 2

The graph included in the ''Wizard'' window makes it easier to choose an appropriate statistical test.

The test statistic of the selected test, calculated according to its formula, follows a corresponding theoretical distribution.

![Student's t distribution with 4 degrees of freedom: two rejection regions of area α/2 each, beyond -2.776445 and 2.776445, and a central area of 1-α; horizontal axis: value of the test statistic](/lib/exe/fetch.php?media=wiki:latex:/imgf35633d473b0ff2a1622818386fd1f3b.png)

The application calculates the value of the test statistic and also the $p$-value for this statistic (the part of the area under the curve that corresponds to the value of the test statistic). The $p$-value enables you to choose the more probable hypothesis (null or alternative). However, you always start by assuming that the null hypothesis is true, and the evidence gathered as data is supposed to supply enough counterarguments against it: if $p \le \alpha$, reject $H_0$ in favour of $H_1$; if $p > \alpha$, there is no reason to reject $H_0$.

The significance level usually chosen is $\alpha = 0.05$, which means accepting that in 5% of situations we will reject a null hypothesis that is in fact true. In specific cases you can choose another significance level, for example 0.01 or 0.001.
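The relationship between a test statistic, its p-value and the significance level can be sketched as follows. This is an illustrative stand-in, not the way PQStat computes p-values: it assumes a two-sided test whose statistic follows Student's t distribution, integrates the density numerically with Simpson's rule, and uses hypothetical function names and a hypothetical statistic value.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p_value(t_stat, df, steps=20000):
    """Area under both tails beyond |t_stat|, via Simpson's rule."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    # The density is symmetric, so double the integral over [0, |t|].
    central_area = 2 * total * h / 3
    return 1 - central_area

def decide(p_value, alpha=0.05):
    """Conventional decision rule comparing the p-value with alpha."""
    return "reject H0" if p_value <= alpha else "no reason to reject H0"

p = two_sided_p_value(3.2, df=4)  # hypothetical value of the test statistic
print(f"p = {p:.4f}: {decide(p)}")
```

With 4 degrees of freedom, a statistic equal to 2.776445 gives a two-sided p-value of about 0.05, so any statistic further from zero leads to rejecting the null hypothesis at that level.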

Note

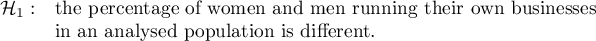

Note that the result of a statistical test may be inconsistent with reality in two cases.

We may make two kinds of errors:

$\alpha$ = type I error (the probability of rejecting hypothesis $H_0$ when it is true),

$\beta$ = type II error (the probability of accepting hypothesis $H_0$ when it is false).

The values of $\alpha$ and $\beta$ are connected with each other. The accepted practice is to fix the significance level $\alpha$ in advance and to minimise $\beta$ by increasing the sample size.
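A small simulation can make the connection between the two error rates and the sample size concrete. Everything in this sketch is an assumption made for illustration: a two-sided z-test with known standard deviation 1, a true mean shift of 0.5 under the alternative, and the hypothetical helper names `rejects_h0` and `error_rates`.

```python
import math
import random
import statistics

def rejects_h0(sample, mu0=0.0, sigma=1.0, alpha=0.05):
    """Two-sided z-test of H0: mean == mu0, with known sigma."""
    z = (statistics.fmean(sample) - mu0) / (sigma / math.sqrt(len(sample)))
    critical = statistics.NormalDist().inv_cdf(1 - alpha / 2)
    return abs(z) > critical

def error_rates(n, true_shift=0.5, trials=2000, alpha=0.05, seed=0):
    """Estimate (type I rate, type II rate) by simulation for sample size n."""
    rng = random.Random(seed)
    # Type I error: H0 is true (mean 0) but the test rejects it.
    type_1 = sum(
        rejects_h0([rng.gauss(0.0, 1.0) for _ in range(n)], alpha=alpha)
        for _ in range(trials)
    ) / trials
    # Type II error: H0 is false (mean = true_shift) but it is not rejected.
    type_2 = sum(
        not rejects_h0([rng.gauss(true_shift, 1.0) for _ in range(n)], alpha=alpha)
        for _ in range(trials)
    ) / trials
    return type_1, type_2

for n in (10, 40, 160):
    t1, t2 = error_rates(n)
    print(f"n = {n:3d}: type I ~ {t1:.3f}, type II ~ {t2:.3f}")
```

The estimated type I rate should stay close to the chosen $\alpha$ for every sample size, while the estimated type II rate should fall as the sample grows.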

- The 3rd step: Describe the results of the hypothesis verification.


en/statpqpl/hipotezypl.txt · Last modified: 2022/02/11 12:37 by admin

Except where otherwise noted, all content on this wiki is licensed under: CC Attribution-Noncommercial-Share Alike 4.0 International