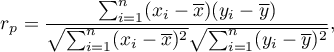

The linear correlation coefficients

The Pearson product-moment correlation coefficient $r_p$, also called Pearson's linear correlation coefficient (Pearson (1896, 1900)), is used to describe the strength of the linear relation between 2 features. It may be calculated on an interval scale as long as there are no measurement outliers and the distribution of residuals or the distribution of the analysed features is normal.

$$r_p = \frac{\sum_{i=1}^n (x_i-\overline{x})(y_i-\overline{y})}{\sqrt{\sum_{i=1}^n (x_i-\overline{x})^2}\sqrt{\sum_{i=1}^n (y_i-\overline{y})^2}}$$

where:

$x_i, y_i$ - consecutive values of the features $X$ and $Y$,

$\overline{x}, \overline{y}$ - mean values of the features $X$ and $Y$,

$n$ - sample size.
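The quantities listed above (paired values, their means, and the sample size) are all that is needed to compute the coefficient. The following is a minimal Python sketch of the standard Pearson formula; the function name `pearson_r` and the data are made up for illustration and are not taken from the age-height example file.

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation coefficient for paired observations."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired and contain at least 2 points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of deviations from the means
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)

# Illustrative data only (hypothetical values)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
print(round(r, 4))
```

The sketch raises `ZeroDivisionError` when one of the features is constant, which matches the fact that the coefficient is undefined in that case.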

Note

$R_p$ – the Pearson product-moment correlation coefficient in a population;

$r_p$ – the Pearson product-moment correlation coefficient in a sample.

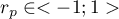

The value of $r_p$ lies in the interval $[-1, 1]$ and should be interpreted as follows:

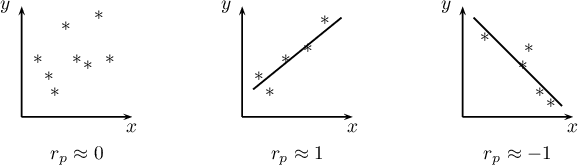

- $r_p \approx 1$ means a strong positive linear correlation: the measurement points lie close to a straight line, and when the independent variable increases, the dependent variable increases too;
- $r_p \approx -1$ means a strong negative linear correlation: the measurement points lie close to a straight line, but when the independent variable increases, the dependent variable decreases;
- if the correlation coefficient is equal to or very close to zero, there is no linear dependence between the analysed features (but another, nonlinear relation might exist).
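The three cases above can be illustrated with small made-up data sets. This is only a sketch: the helper `pearson_r` is a hypothetical name, and the data were chosen so that the result is easy to check by hand. Note that the third data set follows an exact quadratic relation, yet its linear correlation is zero.

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation coefficient for paired observations."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)

x = [-2, -1, 0, 1, 2]

r_positive = pearson_r(x, [2 * xi + 1 for xi in x])   # points on a rising line
r_negative = pearson_r(x, [-xi for xi in x])          # points on a falling line
r_parabola = pearson_r(x, [xi ** 2 for xi in x])      # exact but nonlinear relation

print(r_positive, r_negative, r_parabola)
```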

Graphic interpretation of $r_p$.

If one of the 2 analysed features is constant (regardless of whether the other feature changes), the features are not dependent on each other. In that situation $r_p$ cannot be calculated, because the sum of squared deviations of the constant feature, and hence the denominator of the coefficient, is zero.
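A short sketch of the constant-feature case: the sum of squared deviations of the constant feature is zero, so the division cannot be carried out. Returning NaN here is a design choice of this example (the name `pearson_r_or_nan` is hypothetical); raising an error would be equally reasonable.

```python
import math

def pearson_r_or_nan(x, y):
    """Return the Pearson coefficient, or NaN when either feature is constant."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        # Zero denominator: the coefficient is undefined
        return math.nan
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / math.sqrt(sxx * syy)

x = [1, 2, 3, 4]
y_constant = [7, 7, 7, 7]
result = pearson_r_or_nan(x, y_constant)
print(result)
```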

Note

The correlation coefficient should not be calculated if there are outliers in the sample (they may make the value, and even the sign, of the coefficient completely wrong), if the sample is clearly heterogeneous, or if the analysed relation obviously has a shape other than linear.
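The effect of an outlier on the sign of the coefficient can be seen in a small made-up example. In the sketch below (hypothetical helper `pearson_r`, invented data), five points lie exactly on a falling line, and adding a single distant point turns a perfect negative correlation into a strong positive one.

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation coefficient for paired observations."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)

# Five points on a falling straight line
x = [1, 2, 3, 4, 5]
y = [5, 4, 3, 2, 1]
r_clean = pearson_r(x, y)

# The same sample with one outlier at (20, 20)
r_with_outlier = pearson_r(x + [20], y + [20])

print(round(r_clean, 3), round(r_with_outlier, 3))
```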

The coefficient of determination $r_p^2$ reflects the percentage of the variability of the dependent variable that is explained by the variability of the independent variable.
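As a rough illustration of the "explained variability" reading, the sketch below computes the squared correlation and, separately, the fraction of the total sum of squares removed by a least-squares line. For simple linear regression the two numbers coincide. Function names and data are hypothetical.

```python
def r_squared(x, y):
    """Squared Pearson correlation coefficient."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy ** 2 / (sxx * syy)

def explained_fraction(x, y):
    """1 - SSE/SST for the least-squares line fitted to (x, y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - sse / sst

# Illustrative data only (hypothetical values)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r2 = r_squared(x, y)
explained = explained_fraction(x, y)
print(f"{100 * r2:.0f}% of the variability of y is explained by x")
```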

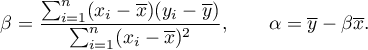

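The fitted model describes a linear relationship:

$$y = \beta x + \alpha$$

The coefficients $\beta$ and $\alpha$ of the linear regression equation can be calculated using the formulas:

$$\beta = \frac{\sum_{i=1}^n (x_i-\overline{x})(y_i-\overline{y})}{\sum_{i=1}^n (x_i-\overline{x})^2}, \qquad \alpha = \overline{y} - \beta\,\overline{x}$$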
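The coefficients of the regression line can be sketched in Python with the usual least-squares formulas (slope as the ratio of the sum of cross-products to the sum of squared deviations of the independent variable, intercept from the two means). The function name `linear_fit` and the data are assumptions made for illustration.

```python
def linear_fit(x, y):
    """Least-squares slope and intercept of the line y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Illustrative data only (hypothetical values)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept = linear_fit(x, y)

# Value of the dependent variable predicted by the line at x = 6
predicted = slope * 6 + intercept
print(round(slope, 3), round(intercept, 3), round(predicted, 3))
```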

EXAMPLE cont. (age-height.pqs file)

en/statpqpl/korelpl/parpl/rppl.txt · last modified: 2022/02/13 18:45 by admin

All content on this wiki that has not been assigned a license is licensed under: CC Attribution-Noncommercial-Share Alike 4.0 International