Analysis of model residuals

To obtain a correct regression model, we should check the basic assumptions concerning the model residuals.

- Outliers

Studying the model residuals is a quick way to detect outliers. Such observations can distort the regression equation considerably, because they strongly affect the values of its coefficients. If a given residual $e_i$ deviates from the mean value by more than 3 standard deviations, the observation can be classified as an outlier. Removing an outlier can substantially improve the model.
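The 3-standard-deviation rule can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not PQStat's implementation: the function name `flag_outliers` is hypothetical, and using the sample standard deviation (`ddof=1`) is an assumption, since the text does not say which estimator the program uses.

```python
import numpy as np

def flag_outliers(residuals, k=3.0):
    """Return indices of residuals lying more than k standard deviations from their mean."""
    e = np.asarray(residuals, dtype=float)
    sd = e.std(ddof=1)  # sample standard deviation (assumption: ddof=1)
    return np.flatnonzero(np.abs(e - e.mean()) > k * sd)

# Hypothetical residuals: nineteen small values and one large value at index 19.
residuals = [0.1, -0.1] * 9 + [0.1, 10.0]
print(flag_outliers(residuals))  # [19]
```

Note that with few observations a single residual can hardly exceed 3 standard deviations, because the outlier itself inflates the standard deviation.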

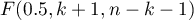

Cook's distance - describes the magnitude of the change in the regression coefficients produced by omitting a case. In the program, Cook's distances of cases that exceed the 50th percentile (the median) of the F-Snedecor distribution are highlighted in bold.
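A sketch of Cook's distance for an ordinary least squares fit with an intercept, using the textbook formula $D_i = \frac{e_i^2}{p\,s^2}\cdot\frac{h_{ii}}{(1-h_{ii})^2}$, where $h_{ii}$ is the leverage of case $i$, $p$ the number of estimated coefficients and $s^2$ the residual variance. The function name is hypothetical, and the cutoff's degrees of freedom $F(p, n-p)$ are a common convention assumed here; PQStat's exact choice is not stated in the text.

```python
import numpy as np
from scipy import stats

def cooks_distance(X, y):
    """Cook's distances for an OLS fit with intercept, plus the F-median cutoff.

    X holds the independent variables, shape (n,) or (n, k), without a constant column.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    A = np.column_stack([np.ones(n), X])  # design matrix with an intercept column
    p = A.shape[1]                        # number of estimated coefficients
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    e = y - A @ beta                      # model residuals
    s2 = e @ e / (n - p)                  # residual variance estimate
    # Leverages h_ii: diagonal of the hat matrix A (A'A)^-1 A'
    h = np.einsum("ij,jk,ik->i", A, np.linalg.inv(A.T @ A), A)
    D = e**2 / (p * s2) * h / (1 - h) ** 2
    cutoff = stats.f.ppf(0.5, p, n - p)   # 50th percentile of F(p, n - p), assumed df
    return D, cutoff

# Hypothetical data: ten points on y = 2x plus one influential point at x = 20.
x = np.r_[np.arange(10), 20]
y = np.r_[2 * np.arange(10), 0]
D, cutoff = cooks_distance(x, y)
print(np.flatnonzero(D > cutoff))  # [10]
```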

Mahalanobis distance - is dedicated to detecting outliers: high values indicate that a case lies far from the center (centroid) of the independent variables. If the case with the highest Mahalanobis distance is also among the cases lying more than 3 standard deviations away, it is marked in bold as an outlier.
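As an illustration, the squared Mahalanobis distance of each case from the centroid of the independent variables is $(\mathbf{x}_i-\bar{\mathbf{x}})^{T} S^{-1} (\mathbf{x}_i-\bar{\mathbf{x}})$, with $S$ the sample covariance matrix. The sketch below returns the squared distance (take the square root if the unsquared form is needed); the function name is hypothetical and it is not necessarily how PQStat computes it.

```python
import numpy as np

def mahalanobis_sq(X):
    """Squared Mahalanobis distance of each case from the mean of the independent variables."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]                            # single predictor -> one column
    diff = X - X.mean(axis=0)                     # deviations from the centroid
    S = np.atleast_2d(np.cov(X, rowvar=False))    # sample covariance matrix (ddof=1)
    S_inv = np.linalg.inv(S)
    return np.einsum("ij,jk,ik->i", diff, S_inv, diff)

# Hypothetical predictors: a 3 x 3 grid plus one distant case.
grid = [[a, b] for a in range(3) for b in range(3)]
X = np.array(grid + [[10, 10]])
m = mahalanobis_sq(X)
print(m.argmax())  # 9
```

A useful sanity check: with the sample covariance, the squared distances always sum to $(n-1)k$, where $k$ is the number of independent variables.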

- Normality of the distribution of model residuals

We check this assumption visually using a normal Q-Q plot. A large difference between the distribution of the residuals and the normal distribution may distort the assessment of the significance of the coefficients of the individual variables in the model.
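The coordinates of a normal Q-Q plot can be obtained with SciPy's `scipy.stats.probplot`, which also fits a reference line and reports its correlation coefficient $r$ (values close to 1 suggest approximate normality). This is a sketch outside PQStat; the helper name `normal_qq` and the simulated residuals are hypothetical.

```python
import numpy as np
from scipy import stats

def normal_qq(residuals):
    """Coordinates of a normal Q-Q plot and the correlation r of its reference line."""
    (theoretical, ordered), (slope, intercept, r) = stats.probplot(residuals, dist="norm")
    return theoretical, ordered, r

# Hypothetical residuals drawn from a normal distribution.
rng = np.random.default_rng(0)
theoretical, ordered, r = normal_qq(rng.normal(size=200))
print(round(r, 3))
```

To draw the plot, the pairs `(theoretical, ordered)` can be passed to a scatter-plot function such as matplotlib's `plt.scatter`.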

- Homoscedasticity (homogeneity of variance)

To check whether there are regions in which the variance of the model residuals is increased or decreased, we use plots of:

- the residual with respect to predicted values

- the square of the residual with respect to predicted values

- the residual with respect to observed values

- the square of the residual with respect to observed values
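The four plots listed above use only the observed values $y_i$, the predicted values $\hat{y}_i$ and the residuals $e_i = y_i - \hat{y}_i$. The sketch below prepares the $(x, y)$ series for each plot; the function name and the dictionary keys are hypothetical, and the plotting itself is left to any charting tool.

```python
import numpy as np

def residual_plot_data(y, y_hat):
    """(x, y) series for the four residual plots used to inspect homoscedasticity."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    e = y - y_hat  # model residuals
    return {
        "residual vs predicted": (y_hat, e),
        "squared residual vs predicted": (y_hat, e**2),
        "residual vs observed": (y, e),
        "squared residual vs observed": (y, e**2),
    }

# Hypothetical observed and predicted values.
series = residual_plot_data([3.0, 5.0, 7.5], [2.5, 5.5, 7.0])
print(series["squared residual vs predicted"][1])  # [0.25 0.25 0.25]
```

A funnel shape (spread growing or shrinking along the x-axis) in any of these plots suggests heteroscedasticity.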

- Autocorrelation of model residuals

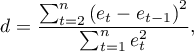

For the constructed model to be deemed correct, the residuals should not be correlated with one another (for all pairs $e_i, e_j$). The assumption can be checked by computing the Durbin-Watson statistic.
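For reference, the standard Durbin-Watson statistic for residuals in their natural (e.g. time) order is $d = \sum_{t=2}^{n}(e_t - e_{t-1})^2 \big/ \sum_{t=1}^{n} e_t^2$. It always lies between 0 and 4, and values near 2 indicate no first-order autocorrelation. A minimal sketch follows (the function name is hypothetical; statsmodels provides an equivalent `statsmodels.stats.stattools.durbin_watson`):

```python
import numpy as np

def durbin_watson(residuals):
    """Durbin-Watson statistic d for residuals given in their natural order."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

print(durbin_watson([1, -1, 1, -1]))  # 3.0
print(durbin_watson([1, 1, 1, 1]))    # 0.0
```

Alternating residuals push $d$ toward 4 (negative autocorrelation), while a run of same-sign residuals pushes it toward 0 (positive autocorrelation).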

To test for positive autocorrelation at the significance level $\alpha$, we check the position of the statistic $d$ with respect to the upper ($d_U$) and lower ($d_L$) critical values:

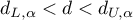

- If $d < d_L$ – the errors are positively correlated;

- If $d > d_U$ – the errors are not positively correlated;

- If $d_L \le d \le d_U$ – the test result is ambiguous.
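The decision rule for positive autocorrelation translates directly into code. In this sketch the function name is hypothetical, and the critical values in the usage line are made up for illustration; real $d_L$ and $d_U$ must be read from Durbin-Watson tables for the given $\alpha$, sample size and number of independent variables.

```python
def dw_positive_autocorrelation(d, d_lower, d_upper):
    """Decision rule for positive autocorrelation given d and tabulated critical values."""
    if d < d_lower:
        return "positively correlated"
    if d > d_upper:
        return "not positively correlated"
    return "ambiguous"  # d_lower <= d <= d_upper

# d_lower and d_upper below are hypothetical, not values from the Savin and White tables.
print(dw_positive_autocorrelation(0.9, d_lower=1.1, d_upper=1.5))  # positively correlated
```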

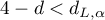

To test for negative autocorrelation at the significance level $\alpha$, we check the position of the value $4 - d$ with respect to the upper ($d_U$) and lower ($d_L$) critical values:

- If $4 - d < d_L$ – the errors are negatively correlated;

- If $4 - d > d_U$ – the errors are not negatively correlated;

- If $d_L \le 4 - d \le d_U$ – the test result is ambiguous.
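The negative-autocorrelation test reuses the same critical values, applied to $4 - d$ instead of $d$. As before, this is a sketch with a hypothetical function name and made-up critical values in the usage line.

```python
def dw_negative_autocorrelation(d, d_lower, d_upper):
    """Decision rule for negative autocorrelation: apply the bounds to 4 - d."""
    q = 4.0 - d
    if q < d_lower:
        return "negatively correlated"
    if q > d_upper:
        return "not negatively correlated"
    return "ambiguous"  # d_lower <= 4 - d <= d_upper

# Hypothetical critical values, for illustration only.
print(dw_negative_autocorrelation(3.2, d_lower=1.1, d_upper=1.5))  # negatively correlated
```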

The critical values of the Durbin-Watson test for the significance level $\alpha$ are available on the website (pqstat); their source is the Savin and White tables (1977)1).

EXAMPLE cont. (publisher.pqs file)